Data Ingestion

What is Data Ingestion?

Data ingestion is the step in which an application program, data integration platform or database management system takes in data so that it can be processed or analyzed. Most applications can be described in three phases: data ingestion, processing and output.

Data Ingestion in Data Warehousing and Data Science

Data warehouses and machine learning models analyze data that must first be extracted from one or more source systems. Data preparation and ETL processes move that data to the analytics database. Data preparation pipelines ingest data before moving it to target analytic systems. Similarly, ETL, which stands for extract, transform and load, includes data ingestion when it extracts data from source systems, before it loads the transformed data into an analytics database.
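The extract, transform and load steps described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the CSV content, the orders table and all function names are hypothetical, and an in-memory SQLite database stands in for the analytics database.

```python
import csv
import io
import sqlite3

# Hypothetical source extract: a CSV export from an operational system.
SOURCE_CSV = """order_id,amount,currency
1001,19.99,usd
1002,5.00,usd
1003,12.50,eur
"""

def extract(text):
    """Ingest raw rows from the source export as dictionaries of strings."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Convert types and normalize currency codes before loading."""
    return [
        (int(r["order_id"]), float(r["amount"]), r["currency"].upper())
        for r in rows
    ]

def load(conn, records):
    """Load the transformed records into the (stand-in) analytics table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

def run_etl(conn, text=SOURCE_CSV):
    """Run extract, transform and load, then return the loaded row count."""
    load(conn, transform(extract(text)))
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

Calling run_etl(sqlite3.connect(":memory:")) ingests the three sample rows and loads them into the orders table.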

Examples of Data Ingestion

Ingestion of Parameters by Application Programs

Application programs, functions and microservices ingest data that is passed to them as parameters when they are invoked or called. A SUM function, for example, may be passed a list of numbers, which it adds together to return a total value. More modern application programming interfaces (APIs) used by web applications can be queried for the data they expose, which eases data ingestion. Formats such as JSON and XML are self-describing, so a variable number of elements can be passed without a separately declared delimiter string.
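As a small illustration of parameter ingestion, the sketch below shows a SUM-style function that receives its numbers as call arguments, and a second function that ingests a JSON payload carrying a variable number of elements. The function names and the "values" key are assumptions made for this example.

```python
import json

def sum_values(*numbers):
    """Ingest any number of numeric arguments and return their total."""
    return sum(numbers)

def sum_from_json(payload):
    """Parse a JSON document and total the numbers in its 'values' array.

    The array can hold any number of elements; the JSON syntax itself
    marks where each element starts and ends.
    """
    data = json.loads(payload)
    return sum_values(*data["values"])
```

For example, sum_from_json('{"values": [1, 2, 3]}') passes three elements through to sum_values, and the same call works unchanged for a payload with ten or a hundred elements.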

Data Entry

Data can be validated as humans enter it in forms, before an application program accepts it. Manual data entry is still commonly used to collect survey data, to let carers record medical data and to capture input from online forms.
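Validation at the point of entry might look like the following sketch. The field names (name, email, age), the rules and the deliberately loose email pattern are all hypothetical; a real form would apply whatever rules its application requires.

```python
import re

# Deliberately loose pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate_entry(form):
    """Return a list of error messages for a submitted form.

    `form` maps field names to the raw strings the user typed.
    An empty list means the entry can be accepted for ingestion.
    """
    errors = []
    if not form.get("name", "").strip():
        errors.append("name is required")
    if not EMAIL_PATTERN.match(form.get("email", "")):
        errors.append("email is not valid")
    try:
        age_ok = 0 < int(form.get("age", "")) < 130
    except ValueError:
        # Raised for empty or non-numeric input.
        age_ok = False
    if not age_ok:
        errors.append("age must be a whole number between 1 and 129")
    return errors
```

An application would accept the record only when validate_entry returns an empty list, and otherwise show the messages next to the form.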

Ingesting Transaction Records

ERP systems such as those from Oracle and SAP write journal records for each transaction. Batch systems ingest this data to summarize daily transactions for reporting and end-of-day reconciliation.
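A batch job that summarizes journal records by day could be sketched as follows. The record layout (date, account, amount) is invented for illustration and does not reflect any specific ERP export format; amounts are held as Decimal to avoid floating-point rounding in financial totals.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical journal records, as a batch job might read them from a file.
JOURNAL = [
    {"date": "2024-03-01", "account": "sales", "amount": "120.00"},
    {"date": "2024-03-01", "account": "sales", "amount": "80.50"},
    {"date": "2024-03-01", "account": "refunds", "amount": "-15.00"},
    {"date": "2024-03-02", "account": "sales", "amount": "42.00"},
]

def summarize_daily(records):
    """Total the amounts per (date, account) pair."""
    totals = defaultdict(Decimal)  # missing keys start at Decimal("0")
    for rec in records:
        totals[(rec["date"], rec["account"])] += Decimal(rec["amount"])
    return dict(totals)
```

The resulting dictionary holds one total per day and account, which is the kind of summary an end-of-day reconciliation report would start from.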

Log Data

IT systems such as websites record visits by logging URLs and cookie data. Marketing and sales automation systems such as HubSpot ingest this data, using it to map these URLs to corporations and to match cookie data to existing prospect lists.
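Ingesting web log data usually starts with parsing each line into fields. The sketch below assumes log lines in a layout similar to the common access-log format used by many web servers; the regular expression and the decision to count only status 200 responses are choices made for this example, not a description of how any particular product processes logs.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Assumed layout: ip - - [timestamp] "METHOD url PROTOCOL" status ...
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" (?P<status>\d{3})'
)

def count_page_visits(lines):
    """Count successful requests per URL path, ignoring query strings."""
    visits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines that do not fit the assumed layout
        if match["status"] == "200":
            visits[urlsplit(match["url"]).path] += 1
    return visits
```

Lines that do not match the assumed layout are skipped rather than raising an error, a common choice when ingesting logs whose format is not fully controlled.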

Cloud-Based Data Ingestion

Cloud-based storage such as AWS S3 can be presented through interfaces that emulate on-prem operating system file access paradigms and expose familiar APIs, so applications can ingest cloud data as if it were locally resident.

Real-Time Data

Gaming and stock trading systems tend to bypass file system APIs, preferring to ingest data directly from streamed in-memory message queues.
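The idea of ingesting from an in-memory queue rather than from files can be illustrated with Python's standard queue and threading modules. This is only a single-process sketch: real trading or gaming systems would use dedicated messaging infrastructure, and the tick tuples and function names here are hypothetical.

```python
import queue
import threading

def consume(q, handle):
    """Pull messages off the queue until a None sentinel arrives."""
    while True:
        message = q.get()  # blocks until a message is available
        if message is None:
            break
        handle(message)

def run_demo(ticks):
    """Feed ticks through an in-memory queue to a consumer thread."""
    q = queue.Queue()
    received = []
    worker = threading.Thread(target=consume, args=(q, received.append))
    worker.start()
    for tick in ticks:
        q.put(tick)  # producer side: no file is written or read
    q.put(None)      # tell the consumer to stop
    worker.join()
    return received
```

Because queue.Queue is first-in, first-out and there is a single consumer, the messages are handled in the order they were produced.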

Ingesting Database Records

Database systems accept and parse queries, written in SQL or expressed as key-value lookups, and return a result set of records that match the selection criteria. The calling application then typically processes the records one at a time.
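The query-then-iterate pattern can be shown with Python's built-in sqlite3 module. The customers table, its contents and the function names are made up for this example; other databases expose the same pattern through their own drivers.

```python
import sqlite3

def build_demo_db():
    """Create an in-memory database with a small hypothetical table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
    )
    conn.executemany(
        "INSERT INTO customers (name, country) VALUES (?, ?)",
        [("Ada", "UK"), ("Grace", "US"), ("Alan", "UK")],
    )
    conn.commit()
    return conn

def names_in_country(conn, country):
    """Run a parameterized SQL query and process the result set row by row."""
    cursor = conn.execute(
        "SELECT name FROM customers WHERE country = ? ORDER BY name",
        (country,),
    )
    names = []
    for (name,) in cursor:  # the cursor yields one record at a time
        names.append(name)
    return names
```

The selection criterion is passed as a bound parameter rather than concatenated into the SQL text, which avoids SQL injection and lets the database reuse the parsed statement.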

Loading Data into a Database

Most database vendors provide fast loaders that bulk load data, using multiple parallel streams or bypassing the SQL layer to get the best throughput.
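Vendor fast loaders are product-specific, so the sketch below does not reproduce any of them. It only illustrates the underlying idea of loading in large batches instead of row by row, using SQLite as a stand-in; the readings table, the batch size and the function name are assumptions for this example.

```python
import itertools
import sqlite3

def bulk_load(conn, rows, batch_size=1000):
    """Insert rows in batches, committing once per batch.

    `rows` can be any iterable of (sensor, value) tuples, including a
    generator, so the full data set never has to be held in memory.
    """
    conn.execute("CREATE TABLE IF NOT EXISTS readings (sensor TEXT, value REAL)")
    iterator = iter(rows)
    loaded = 0
    while True:
        batch = list(itertools.islice(iterator, batch_size))
        if not batch:
            break
        with conn:  # one transaction (and one commit) per batch
            conn.executemany("INSERT INTO readings VALUES (?, ?)", batch)
        loaded += len(batch)
    return loaded
```

Committing per batch rather than per row removes most of the per-statement overhead. A true fast loader goes further, for example by writing storage blocks directly or by loading several streams in parallel.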

Streaming Data Ingestion

A popular alternative to traditional file-based data ingestion is to consume streaming data through messaging and stream processing systems such as AWS SNS, IBM MQ, Apache Flink and Kafka. As new records are created, they are immediately made available to applications that subscribe to the data stream.
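The subscribe-and-receive model can be illustrated without any external service. The Topic class below is a hypothetical, in-process stand-in written for this example; it is not the API of Kafka, SNS or any other product, and it leaves out persistence, partitioning and delivery guarantees.

```python
class Topic:
    """A toy publish/subscribe topic held entirely in memory."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        """Register a callable that will receive every new record."""
        self._subscribers.append(callback)

    def publish(self, record):
        """Deliver a new record to all current subscribers immediately."""
        for callback in list(self._subscribers):
            callback(record)
        return len(self._subscribers)
```

Each subscriber sees every record at the moment it is published, which is the property that distinguishes stream ingestion from waiting for a complete file to arrive.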

Edge Data Ingestion

IoT devices generate masses of data that would overwhelm corporate networks and central server capacity if it were all sent unfiltered. Gateway or edge servers ingest the sensor data, discard the less interesting readings and compress the rest before transmitting it to central servers. This form of pre-ingestion optimizes resource utilization and increases effective data throughput over busy networks.
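The filter-then-compress step on an edge server could be sketched as follows. What counts as "interesting" is application-specific; this example assumes, purely for illustration, that a reading is worth keeping only when it differs from the last kept reading by at least a threshold. The reading layout and function names are hypothetical.

```python
import json
import zlib

def prepare_for_upload(readings, threshold=1.0):
    """Drop near-duplicate readings, then compress what remains.

    A reading is kept when it is the first one, or when its value
    differs from the last kept value by at least `threshold`.
    """
    kept = []
    last_value = None
    for reading in readings:
        if last_value is None or abs(reading["value"] - last_value) >= threshold:
            kept.append(reading)
            last_value = reading["value"]
    payload = json.dumps(kept).encode("utf-8")
    return zlib.compress(payload)

def decode_upload(blob):
    """Central-server side: decompress and parse the uploaded batch."""
    return json.loads(zlib.decompress(blob))
```

With a steady temperature signal, most readings fall below the threshold and are discarded at the edge, so only the changes travel over the network.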

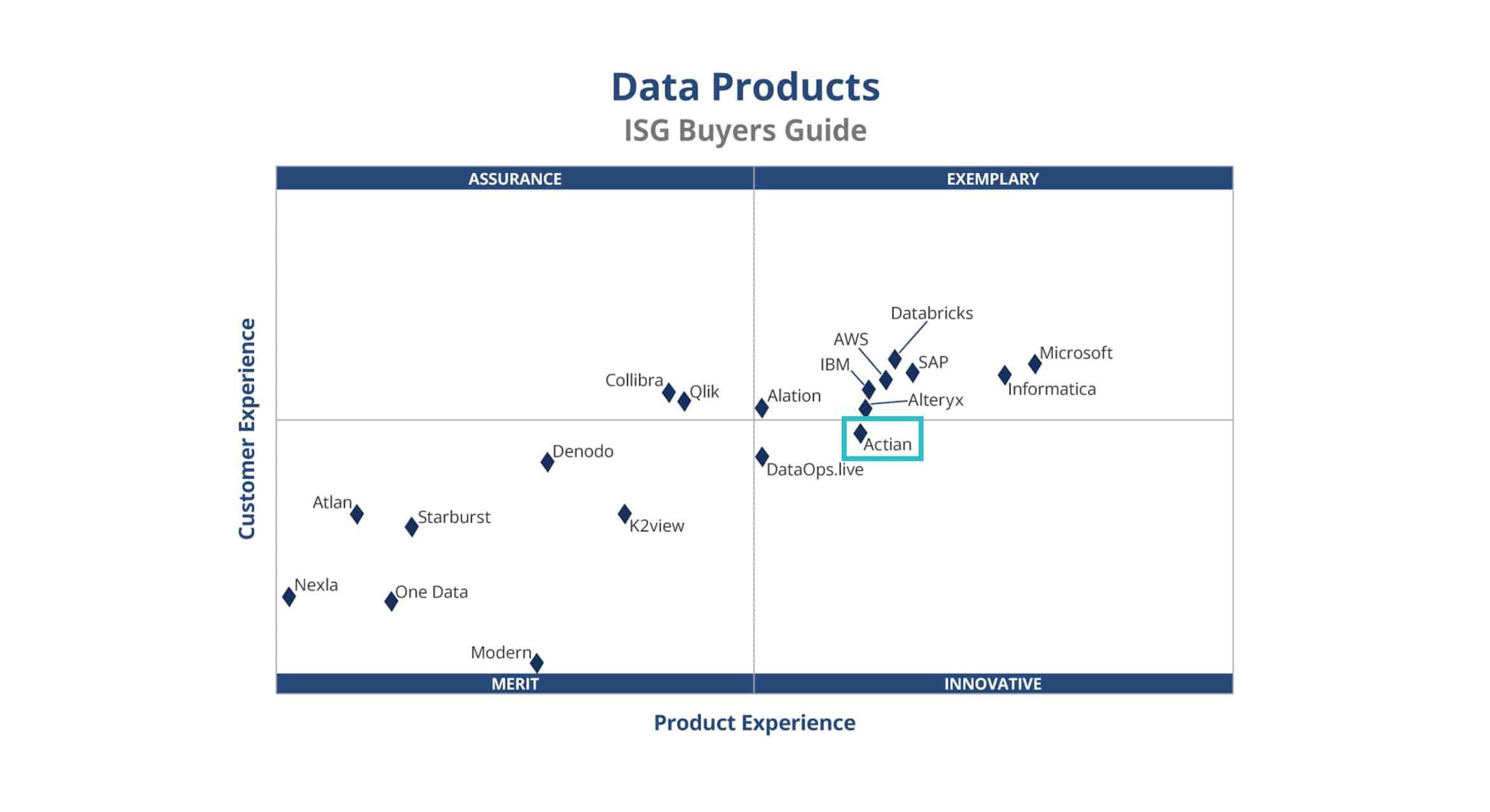

Actian and Data Ingestion

The Actian Data Platform manages your data assets from edge to cloud. Built-in data integration eases data ingestion by providing pre-built connectors to hundreds of data sources, covering the data pipeline from source data ingestion to visualized insights. Actian DataConnect supports file-based and stream-based ingestion from JMS, Kafka, MSMQ, RabbitMQ and WebSphere MQ. Data integration is managed centrally, so data pipelines can be orchestrated from one place. Data analytics uses highly parallelized query execution for fast analytics.

Download self-managed database instances running on-prem or use the Actian Data Platform on AWS, Google Cloud and Microsoft Azure public clouds.