It’s Back to the Future for Flat Files – Part 3

Actian Corporation

November 25, 2019

Why Embedded Software Application Developers Should Dump Flat Files Before They Have to Run Dump on Them

I wrote an initial blog a few weeks ago on flat files and why embedded software application developers readily adopted them. Then in the next blog, I covered why embedded developers are reluctant to use databases. In this third installment, I’d like to discuss why they should consider moving away from flat files. After all, the old adage “If it ain’t broke, don’t fix it” applies here: we should establish what is actually broken before we discuss why one option is better than another.

Are You Suffering From “Who Moved My Cheese” Syndrome?

In most cases, change doesn’t happen in ways that we immediately recognize and feel compelled to react to. This is certainly the case with edge intelligence and the factors that are driving it.

Considered individually, changes such as the move from 32-bit to 64-bit processors, faster and larger DRAM, the ability of a wider community of developers and data scientists to use more sophisticated tools and operating environments, and the ability to leverage more complex algorithms capable of implementing machine learning don’t dictate a move from flat files to some other edge data management system.

However, the drive for change comes from the external demands that the confluence of these changes can now meet. Together they unleash a myriad of new opportunities for the business side to automate and improve decision-making at the point of action, that is, at the edge.

So, what does “Who Moved My Cheese” syndrome have to do with all these new opportunities compelling developers using flat files to change? Simple: flat files can still do the job – just not that well – and that’s what creates an opening to fight the change.

In general, when those with real expertise use a tried and proven methodology that can still meet a new requirement, however sub-optimally, it’s human nature to want to justify and force continued use of that methodology. Let’s look at why flat files are not optimal in handling this confluence of new compute resources and the desire to leverage them for the coming fusion of the Industrial Internet of Things (IIoT) and Artificial Intelligence (AI).

It May be Smelly Cheese to You, But it’s My Favorite Perfume!

Flat files are simple to use, reliable, and cost nothing because they come bundled with the underlying operating system, so it’s no wonder that they are so widely adopted. However, the move from siloed, low-data-rate, simple processing to hyper-connected IIoT with AI at the edge means flat files won’t pass the sniff test going forward, for the following three reasons:

- The Increasing Demand for Edge Intelligence and in Particular, IIoT Will Drive the Need for Distributed Data Management and Not Just Simple Local Data Storage and Retrieval

File systems are really about data storage – the foundation level of data management but not comprehensive data management in and of itself. And while state-of-the-art file systems often include replication, defragmentation, encryption, and other key modern data management features, they don’t replace content management systems, let alone records management or database systems that cover more advanced features including built-in indexing, filtering, sophisticated query, client-server, peer-to-peer, and other key functionality needed for Edge data management in IoT use cases.

- Modern Edge Intelligence Needs Support for In-Line Analytics Based on More Than Just the Locally Sourced Data Streams

Edge data processing and analytics have largely been confined to simple processing of a single data stream and data type, handled as a time-series data set with temporal filtering to improve the signal-to-noise ratio (SNR) or to throw out data that has not veered away from some unremarkable threshold. Going forward, there will be multiple data streams and data types, with baseline patterns that are referenced and correlated and machine learning algorithms applied to them. These more sophisticated approaches may require data from neighboring devices as well as upstream data from systems as far away as ERP systems in the data center. That calls for built-in functionality for joins across multiple tables, the ability to handle streaming of different data types, and publish and subscribe for peer-to-peer and client-server communication. These requirements are far more sophisticated and are not easily built from scratch the way simple indexing, sorting, and other typical flat-file DIY add-ons have been crafted in the past. With a modern edge data management system, all this functionality is hyper-connected, and interoperability with streaming data standards like Kafka or Spark is a given.

- The Machine Learning (ML) Lifecycle, Reporting, and Visualization Tools Need Plug-and-play Retrieval Based on Industry Standards Over and Above Those for File Systems

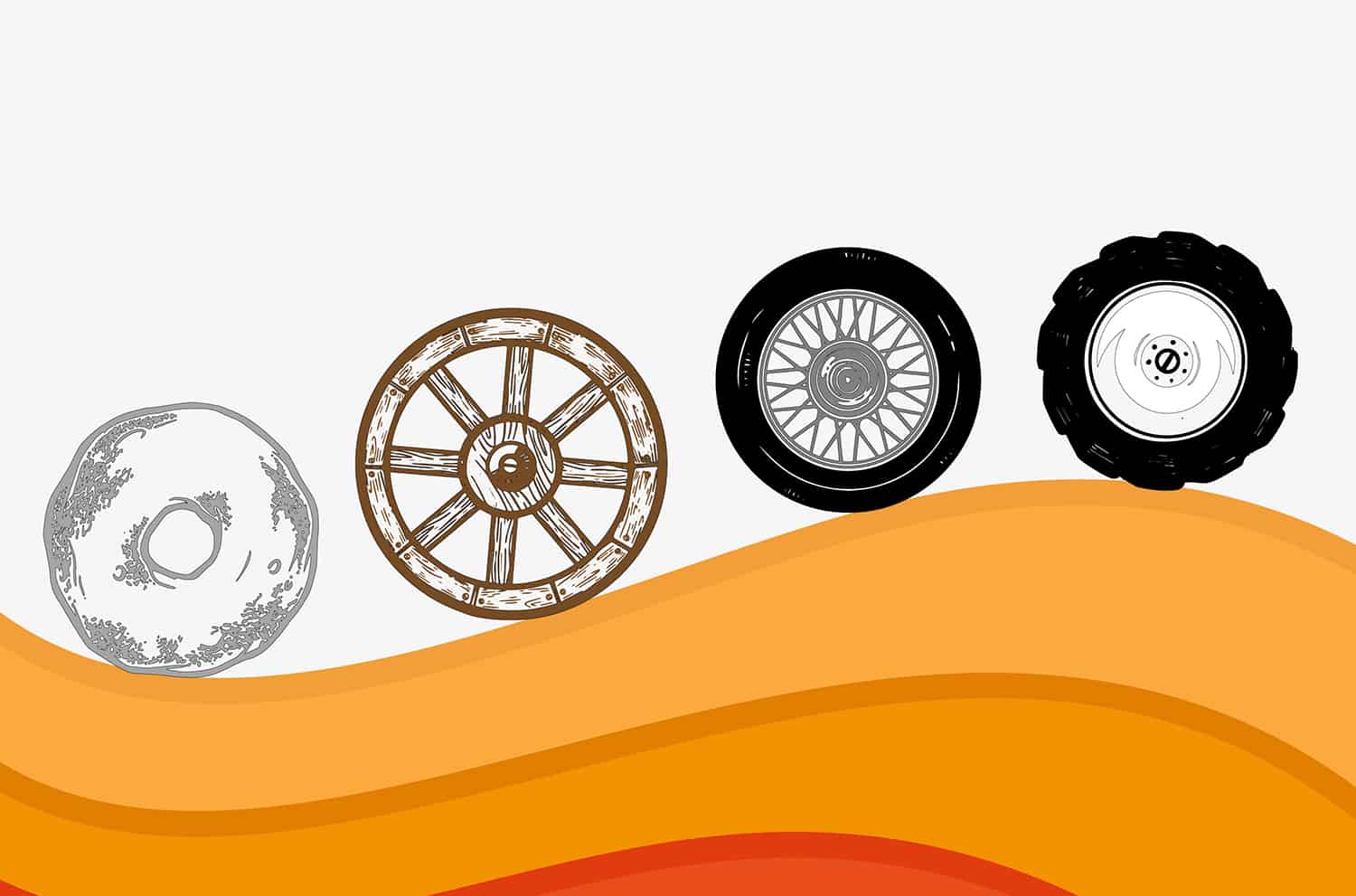

It’s not just a matter of reinventing the wheel for local functionality for inline analytics to support ML; it’s also the fact that ML has a lifecycle that includes training algorithms with datasets from the edge. After training, the algorithms are then deployed unsupervised at the edge to perform ML inference on new data. Reporting and visualization are critical in analyzing how well the ML is working and whether you are getting the expected business outcomes. Again, modern edge data management systems have built-in functionality to help with these tools and in support of these objectives.
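To make the contrast in the three reasons above a little more concrete, here is a minimal Python sketch. It answers one question, "which readings exceeded their device's baseline?", twice: once with a hand-written scan over a flat file, and once with the indexing and join functionality a database provides out of the box. SQLite from the Python standard library is used purely as a convenient stand-in and is not the edge data management system this series discusses. The table names, device IDs, and thresholds are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical sensor readings as they might sit in a flat file (CSV).
FLAT_FILE = """device_id,ts,temperature
pump-1,1,70.1
pump-1,2,70.3
pump-2,1,65.0
pump-1,3,91.7
pump-2,2,65.2
"""

# Hypothetical per-device baselines, e.g. supplied by an upstream system.
BASELINES = {"pump-1": 75.0, "pump-2": 80.0}


def parse_rows(text):
    """Turn the CSV text into (device_id, ts, temperature) tuples."""
    reader = csv.DictReader(io.StringIO(text))
    return [(r["device_id"], int(r["ts"]), float(r["temperature"])) for r in reader]


def flat_file_alerts(text, baselines):
    """DIY approach: scan every row and correlate with baselines by hand."""
    alerts = []
    for device_id, ts, temperature in parse_rows(text):
        if temperature > baselines[device_id]:
            alerts.append((device_id, ts, temperature))
    return alerts


def database_alerts(text, baselines):
    """Same question, answered with a built-in index and a join."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE readings (device_id TEXT, ts INTEGER, temperature REAL)")
    conn.execute("CREATE TABLE baselines (device_id TEXT PRIMARY KEY, max_temp REAL)")
    # The index is declared once; the engine maintains and uses it.
    conn.execute("CREATE INDEX idx_readings_device ON readings (device_id, ts)")
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", parse_rows(text))
    conn.executemany("INSERT INTO baselines VALUES (?, ?)", list(baselines.items()))
    query = """
        SELECT r.device_id, r.ts, r.temperature
        FROM readings AS r
        JOIN baselines AS b ON b.device_id = r.device_id
        WHERE r.temperature > b.max_temp
        ORDER BY r.device_id, r.ts
    """
    result = conn.execute(query).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    print(flat_file_alerts(FLAT_FILE, BASELINES))
    print(database_alerts(FLAT_FILE, BASELINES))
```

With five rows the two versions are about equally short. The difference shows up as requirements grow: each additional stream, correlation, or sort order means more hand-written logic in the flat-file version, while the database version mostly changes the query.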

Granted, in all cases, file systems are still needed. Most databases, historians, and homegrown edge data management systems leverage file systems for data storage. Also, with enough brute force and sweat, you can always reinvent the wheel with added logic to support any functionality found in something off-the-shelf. The problems in taking a flat-file approach are opportunity costs, reduced speed of innovation, and fit-for-purpose deficiencies created by the need to build a much bigger, far more sophisticated wheel. In the next segment we’ll review exactly what’s needed out-of-the-box in a modern edge data management system.

Actian is the industry leader in operational data warehouse and edge data management solutions for modern businesses. With a complete set of connected solutions to help you manage data on-premises, in the cloud, and at the edge with mobile and IoT, Actian can help you develop the technical foundation needed to support true business agility. To learn more, visit www.actian.com.
